Neural Search Integration in Web Applications: Transforming How Users Discover, Explore, and Engage

- Why Traditional Search Is No Longer Enough

- What Is Neural Search?

- How Neural Search Works

- Tools and Technologies Powering Neural Search Integration

- Benefits of Neural Search in AI-Based Web Applications

- Use Cases of Neural Search in Web Applications

- Implementation Guide: Adding Neural Search to a Web Application

- Challenges and Best Practices

- The Future of Neural Search

- AI Web Applications as Intelligent Ecosystems

- Conclusion

- About iProgrammer

The web has changed. What used to be a straightforward search for information has become a path to discovery, where each click, each question, and each interaction has purpose and anticipation. Whether a user engages or abandons a platform now depends on understanding not only the words (keywords) they enter but what they intend.

Traditional search once served its purpose. But today, businesses need platforms that do more than retrieve. They need platforms that interpret, anticipate, and respond with intelligence. This is the promise of AI web applications: systems that don’t just store content, but understand it, and deliver experiences that feel natural, effortless, and deeply human.

At iProgrammer, we’ve closely watched this evolution. The transition from static search boxes to smarter discovery layers is reshaping digital experiences across industries. Leading that change is neural search, a technology that is transforming how people interact with information and how companies connect meaningfully with their audiences.

Why Traditional Search Is No Longer Enough

Keyword-based search was built on exact matches and lexical logic. It looked for words, not meanings. Type “running shoes for hiking”, and a traditional system might miss results labelled “trail sneakers.” The intent is there, but the words don’t match, so the relevant result never appears.
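To make the failure concrete, here is a minimal Python sketch of purely lexical matching. The `tokenize` and `keyword_overlap` helpers and the three-item catalog are hypothetical. Real keyword engines add BM25 ranking, stemming, and synonym lists, but they still depend on overlapping terms.

```python
import string

def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip surrounding punctuation from each word."""
    words = (word.strip(string.punctuation).lower() for word in text.split())
    return {word for word in words if word}

def keyword_overlap(query: str, document: str) -> int:
    """Hypothetical lexical score: how many distinct query terms also appear in the document."""
    return len(tokenize(query) & tokenize(document))

catalog = [
    "Trail sneakers with grippy soles",
    "Running shoes for road racing",
    "Hiking backpack, 30 litres",
]
query = "running shoes for hiking"

# Rank purely by shared words, as a keyword-only engine would.
for title in sorted(catalog, key=lambda t: keyword_overlap(query, t), reverse=True):
    print(keyword_overlap(query, title), title)
```

The trail sneakers are arguably the best match for the intent, yet they share no words with the query. They score zero and rank below both the road-racing shoes and a backpack.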

The model breaks down even further with multilingual content, synonyms, or ambiguous words. Search engines respond to what’s typed, not to what’s meant. In a world where users expect experiences as intuitive as their thoughts, this is no longer enough.

Neural search changes that paradigm. Instead of matching characters, it interprets relationships. It captures the semantic essence of a query, the underlying meaning behind the words, and uses that to surface results.

Think of it as the difference between reading a dictionary and understanding a conversation. The former lists possibilities; the latter grasps context. Neural search is that conversational understanding, translated into code.

What Is Neural Search?

Neural search applies deep learning to translate words, sentences, or documents into mathematical representations known as embeddings. These embeddings exist in a high-dimensional space, where closeness indicates similarity of meaning rather than shared wording. For instance, the embeddings for “cheap flight deals” and “affordable air tickets” lie close to one another, since the two phrases convey similar intent.

This concept allows web applications to transcend the boundaries of literal text. With neural search, a platform can answer a query like “eco-friendly laptop for developers” by recognizing that the user cares about both performance and sustainability, not simply those exact keywords.

Behind the scenes, the embeddings are stored and updated in vector databases, systems that can efficiently handle millions of such high-dimensional representations. Well-known options include Pinecone, Weaviate, and Milvus, along with FAISS, a library for efficient similarity search.

Embedding models such as OpenAI’s embedding models, BERT, and Sentence Transformers power this ability. They are trained on huge datasets to learn language patterns, tone, and context.

How Neural Search Works

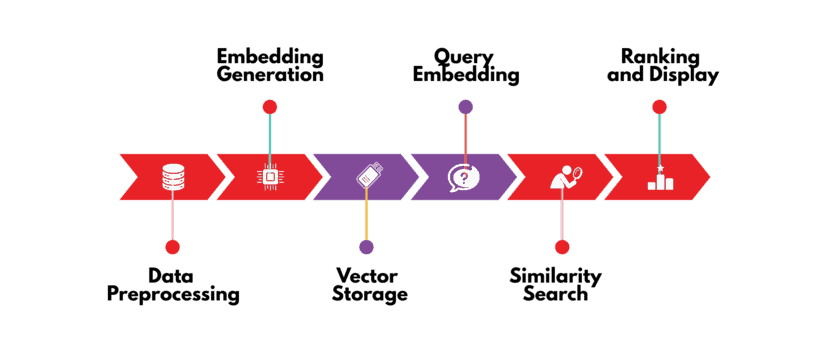

Neural search looks simple on the surface: a user enters a question, and the system produces appropriate answers. Beneath the surface, however, language, mathematics, and machine learning work together in a complex dance. To understand why it seems nearly magical, it helps to trace the path that a question takes in a contemporary AI web app.

Data Preprocessing: Each text (product description, article, FAQ) is cleaned and standardized. This goes beyond correcting typos or punctuation. It means structuring the data, for example by splitting long documents into coherent segments, so the system can interpret context, subtleties, and significance.
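A minimal Python sketch of this step, assuming HTML-ish source text and a word-count limit per segment. The function names, the 100-word window, and the 20-word overlap are illustrative choices, not values any particular model requires. Real pipelines often chunk by tokens or by document structure (headings, paragraphs) instead.

```python
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")
WHITESPACE_RE = re.compile(r"\s+")

def clean_text(raw: str) -> str:
    """Strip HTML tags, decode entities, apply NFKC normalization, and collapse whitespace."""
    text = TAG_RE.sub(" ", raw)                 # remove markup before decoding entities
    text = html.unescape(text)                  # e.g. &amp; -> &, &nbsp; -> non-breaking space
    text = unicodedata.normalize("NFKC", text)  # unify compatibility characters such as U+00A0
    return WHITESPACE_RE.sub(" ", text).strip()

def chunk_words(text: str, max_words: int = 100, overlap: int = 20) -> list[str]:
    """Split text into overlapping windows of at most max_words words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

The overlap means a sentence cut at a window boundary still appears intact in the neighbouring chunk. The cost is some duplicated text in the index.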

Embedding Generation: After preparation, each segment of text is converted into a numerical representation, or vector, using a pre-trained model. These vectors preserve the content of the text—the ideas and relationships it expresses—instead of the individual words. This allows the system to “see” similarity even when the language differs.
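The sketch below shows the shape of this step in Python. A real model needs downloaded weights or an API key, so the sketch uses a hypothetical `HashingEmbedder` stand-in instead. It maps words to fixed-length, unit-length vectors but only captures shared words, not meaning. Treat it as a placeholder for a pre-trained model wrapped behind the same (hypothetical) `embed` interface.

```python
import hashlib
import math
import re
from typing import Protocol

class Embedder(Protocol):
    """Interface a real pre-trained model wrapper would also implement (hypothetical)."""
    def embed(self, texts: list[str]) -> list[list[float]]: ...

class HashingEmbedder:
    """Toy stand-in: hashes each word into one of `dim` buckets, then L2-normalizes.

    Captures word overlap only, NOT semantic similarity.
    """

    def __init__(self, dim: int = 64) -> None:
        self.dim = dim

    def _bucket(self, token: str) -> int:
        # hashlib gives stable buckets across processes; the built-in hash() is salted per run.
        digest = hashlib.blake2b(token.encode("utf-8"), digest_size=8).digest()
        return int.from_bytes(digest, "little") % self.dim

    def embed(self, texts: list[str]) -> list[list[float]]:
        vectors = []
        for text in texts:
            vec = [0.0] * self.dim
            for token in re.findall(r"\w+", text.lower()):
                vec[self._bucket(token)] += 1.0
            norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero on empty text
            vectors.append([v / norm for v in vec])
        return vectors

embedder: Embedder = HashingEmbedder(dim=32)
doc_vectors = embedder.embed(["Sustainable notebook for developers", "Eco-friendly laptop"])
```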

Vector Storage: The embeddings are then stored in a vector database like Pinecone or Weaviate, or in a FAISS index. Think of it as an exceptionally intelligent library where each book is catalogued not by its title, but by what it is about.
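As a rough illustration of what the database does, here is a hypothetical in-memory store in Python. It keeps unit-length vectors and metadata by id and answers queries with an exact brute-force scan. Pinecone, Weaviate, Milvus, and FAISS expose their own APIs. They typically rely on approximate nearest-neighbor indexes to stay fast at millions of vectors.

```python
import math
from typing import Optional

def _unit(vector: list[float]) -> list[float]:
    norm = math.sqrt(sum(v * v for v in vector))
    if norm == 0:
        raise ValueError("zero vectors have no direction and cannot be compared")
    return [v / norm for v in vector]

class InMemoryVectorStore:
    """Hypothetical brute-force vector store for prototypes and tests."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self._vectors: dict[str, list[float]] = {}
        self._metadata: dict[str, dict] = {}

    def __len__(self) -> int:
        return len(self._vectors)

    def _check_dim(self, vector: list[float]) -> None:
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")

    def upsert(self, doc_id: str, vector: list[float], metadata: Optional[dict] = None) -> None:
        """Insert or overwrite one record; vectors are normalized so a dot product equals cosine."""
        self._check_dim(vector)
        self._vectors[doc_id] = _unit(vector)
        self._metadata[doc_id] = metadata or {}

    def delete(self, doc_id: str) -> None:
        self._vectors.pop(doc_id, None)
        self._metadata.pop(doc_id, None)

    def query(self, vector: list[float], top_k: int = 5) -> list[tuple[str, float, dict]]:
        """Return the top_k (id, cosine score, metadata) tuples, best first."""
        self._check_dim(vector)
        q = _unit(vector)
        scored = [
            (doc_id, sum(a * b for a, b in zip(q, stored)), self._metadata[doc_id])
            for doc_id, stored in self._vectors.items()
        ]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:top_k]
```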

Query Embedding: When a user performs a search, their query is converted into a vector through the same procedure. This creates a mathematical snapshot of intent, ready to be compared against the database.

Similarity Search: The system then measures how close the query vector is to the stored vectors, usually with cosine similarity. The smaller the distance between two vectors (or the higher their cosine similarity), the more semantically similar they are. That is how a search for “eco-friendly laptops” can find results labelled “sustainable notebooks for developers” without an exact match.
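For reference, cosine similarity between a query vector $\mathbf{q}$ and a stored vector $\mathbf{d}$ is defined below. Many embedding pipelines normalize vectors to unit length. Under that assumption, the squared Euclidean distance is a simple decreasing function of cosine similarity, so "smaller distance" and "higher cosine similarity" rank results identically.

$$\cos(\mathbf{q}, \mathbf{d}) = \frac{\mathbf{q} \cdot \mathbf{d}}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{d} \rVert}$$

$$\lVert \mathbf{q} - \mathbf{d} \rVert^2 = \lVert \mathbf{q} \rVert^2 + \lVert \mathbf{d} \rVert^2 - 2\,\mathbf{q} \cdot \mathbf{d} = 2 - 2\cos(\mathbf{q}, \mathbf{d}) \quad \text{if } \lVert \mathbf{q} \rVert = \lVert \mathbf{d} \rVert = 1$$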

Ranking and Display: Finally, results are organized by relevance and shown to the user. The system reveals what genuinely matches the intent, rather than merely showing results with similar keywords.
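When documents are split into segments, several chunks of the same document can match one query. The Python sketch below assumes hits arrive as hypothetical `Hit` records carrying cosine scores. It keeps the best chunk per document, drops matches below a cut-off, and sorts by relevance. The 0.3 threshold is a placeholder: useful values depend on the embedding model and should be tuned on real queries.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Hit:
    doc_id: str
    chunk_text: str
    score: float

def rank_for_display(hits: list[Hit], min_score: float = 0.3, limit: int = 10) -> list[Hit]:
    """Keep the best-scoring chunk per document, drop weak matches, and sort best first."""
    best: dict[str, Hit] = {}
    for hit in hits:
        if hit.score < min_score:
            continue  # too weak to show the user
        current = best.get(hit.doc_id)
        if current is None or hit.score > current.score:
            best[hit.doc_id] = hit
    return sorted(best.values(), key=lambda h: h.score, reverse=True)[:limit]
```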

What makes this remarkable is the bridge it creates between human thought and computational understanding. For developers, integrating neural search is a study in precision and architecture; for users, it feels intuitive, almost as if the system can read between the lines. Queries that might have returned empty or frustrating results in traditional search can now deliver meaningful, context-aware answers.

Tools and Technologies Powering Neural Search Integration

A great search experience starts with the right stack. Every piece—embedding model, database, framework—works together to turn queries into meaningful results.

Embedding Models

Models like OpenAI’s and Cohere’s embedding models capture general meaning across text. For more specialized tasks, BERT-based models and Sentence Transformers can be fine-tuned to understand domain-specific language and nuance. These models let web applications grasp intent, not just words.

Vector Databases

Embeddings need a home. Pinecone is a managed cloud service and Weaviate offers both managed and self-hosted deployments. FAISS (a similarity-search library) and Milvus suit high-performance local or hybrid setups. Together, these tools make searching millions of vectors fast and precise.

Integration Frameworks

LangChain and Haystack connect embeddings with application logic, while Elasticsearch and Vespa extend traditional search with semantic understanding.

Languages and Web Frameworks

Backend processing is usually done in Python (often with frameworks such as Django or Flask) or Node.js, while front-end experiences are commonly built with React to keep the system responsive and intuitive.

Architecture Considerations for AI Web Applications

Building neural search into a production-grade web application requires more than picking the right embedding model or vector database. Architecture matters. Elastic data workflows, scalable vector storage, and resilient API layers create a foundation for a system capable of delivering real-time, context-sensitive outcomes at scale.

Microservice or modular architectures allow teams to iterate on embeddings, retrain pipelines, or front-end logic without rewriting the entire platform. Meanwhile, caching strategies, batch processing, and asynchronous updates keep performance snappy even under heavy query loads.

Benefits of Neural Search in AI-Based Web Applications

In an AI-based web application, neural search becomes the quiet intelligence that powers engagement.

- Context-Aware Search: It interprets meaning rather than exact wording, so users receive the most pertinent results even when their phrasing is vague.

- Multilingual Understanding: With a multilingual embedding model, a search in one language can return results written in another, overcoming language barriers.

- Personalization: User embeddings can be built from user actions and past interactions, tailoring results to individual preferences.

- Enhanced Discovery: E-commerce applications use it for intelligent product recommendations; media platforms utilize it for tailored content.

- Real-Time Retrieval: CRMs and knowledge bases can quickly bring related documents or customer information to the top.
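One common way to implement the personalization idea is sketched here in Python. It averages the embeddings of items a user has interacted with into a profile vector, then blends that profile into the query vector before the similarity search. The function names and the default weight of 0.2 are hypothetical and would need tuning against real engagement data.

```python
import math

def normalize(vec: list[float]) -> list[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)

def user_profile(history: list[list[float]]) -> list[float]:
    """Average the embeddings of items the user clicked, bought, or watched."""
    if not history:
        return []
    dim = len(history[0])
    mean = [sum(vec[i] for vec in history) / len(history) for i in range(dim)]
    return normalize(mean)

def personalize_query(query_vec: list[float], profile: list[float], weight: float = 0.2) -> list[float]:
    """Blend query and profile; weight=0 disables personalization, weight=1 ignores the query."""
    q = normalize(query_vec)
    if not profile:
        return q  # new or anonymous users get plain semantic search
    return normalize([(1 - weight) * a + weight * b for a, b in zip(q, profile)])
```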

For companies, these abilities can translate into measurable results: increased engagement, lower bounce rates, and longer session times. For visitors, they mean effortless relevance.

Searching is not merely about retrieving information—it’s a conversation. Neural search allows web applications to anticipate user intent, uncover relevance, and reduce barriers between a user’s needs and the system’s offerings. When done correctly, it transforms the search bar from a mere function into an experience that feels natural and almost effortless.

Consider e-commerce, for instance: consumers frequently lack the exact terminology for a product. Neural search captures their intent, presenting results that align with meaning, not just syntax. In enterprise knowledge portals, it helps employees discover critical insights buried in documents they may never have indexed manually.

Ultimately, designing search for meaning bridges the gap between technology and human expectation. It ensures that relevance is not only measurable in clicks but felt in every interaction.

Use Cases of Neural Search in Web Applications

Neural search isn’t just a concept—it’s actively reshaping how industries connect users with the information they need.

E-Commerce

Retailers go beyond simple keyword matching. Searches such as “lightweight laptop for traveling” or “winter gear for the outdoors” now bring forward products that match the purpose behind the keywords. Consumers find what they are looking for faster, increasing engagement and sales.

Enterprise Portals

Companies with huge internal knowledge stores use semantic search to surface the right information for employees. Contextual retrieval eliminates hours of manual digging, enabling teams to make better, faster decisions.

Customer Support

Help desks powered by AI link user issues to relevant guides, FAQs, or past ticket solutions. It creates a more natural, self-guided experience with decreased wait times while maintaining a human element.

Media and Streaming

Sites suggest content (programs, music, or articles) based on context, current activity, and user interests. The outcome is a personalized, curated experience that keeps people engaged longer.

Healthcare

Medical facilities query knowledge bases semantically to retrieve relevant research articles, patient-record insights, or diagnostic protocols. This enhances decision-making, speeds up research, and enables better patient care.

Implementation Guide: Adding Neural Search to a Web Application

Building neural search into your web app requires both engineering precision and design sensitivity.

1. Define Your Objective

Determine the purpose: product discovery, document retrieval, or content personalization. This guides model selection, data prep, and interface design.

2. Choose an Embedding Model

Pick a model that fits your domain. OpenAI Embeddings work for general use, while BERT or Sentence Transformers excel for specialized content. The goal is capturing intent, not just matching keywords.

3. Prepare and Clean Data

Clean, normalize, and format your text. Properly prepared data helps ensure that embeddings reflect meaning and context.

4. Generate and Store Embeddings

Embed text into vectors and store them in a vector database such as Pinecone, or in a FAISS index. Efficient indexing allows for fast, accurate retrieval even at scale.
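Here is a hedged Python sketch of this step. Documents are embedded in batches, since many embedding models and APIs accept lists of texts, and each batch is written to the store. `embed_batch` and `upsert` are hypothetical callables you would wire to your chosen model and vector database client.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator

def batched(items: Iterable, size: int) -> Iterator[list]:
    """Yield lists of at most `size` items (itertools.batched exists only in Python 3.12+)."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch

def index_documents(
    docs: Iterable[tuple[str, str]],
    embed_batch: Callable[[list[str]], list[list[float]]],
    upsert: Callable[[list[tuple[str, list[float]]]], None],
    batch_size: int = 64,
) -> int:
    """Embed (doc_id, text) pairs in batches, write each batch to the store, return the count."""
    count = 0
    for batch in batched(docs, batch_size):
        ids = [doc_id for doc_id, _ in batch]
        vectors = embed_batch([text for _, text in batch])  # one model call per batch
        upsert(list(zip(ids, vectors)))
        count += len(batch)
    return count
```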

5. Build Query APIs

Set up endpoints to handle user queries, transform them into vectors, and return ranked results. Include keyword-based fallback for robust coverage.
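Below is a framework-agnostic Python sketch of such an endpoint's core logic. `vector_search` and `keyword_search` are hypothetical callables that return `(doc_id, score)` lists sorted best first. The 0.35 cut-off is an assumption to tune. In a real app, this function would sit behind a route in Flask, Django, or a Node.js server.

```python
from typing import Callable

Result = tuple[str, float]  # (doc_id, score)

def search(
    query: str,
    vector_search: Callable[[str, int], list[Result]],
    keyword_search: Callable[[str, int], list[Result]],
    top_k: int = 10,
    min_vector_score: float = 0.35,
) -> dict:
    """Serve semantic results, falling back to keyword search when they are empty or weak."""
    query = query.strip()
    if not query:
        return {"source": "none", "results": []}
    hits = vector_search(query, top_k)
    if hits and hits[0][1] >= min_vector_score:
        return {"source": "vector", "results": hits}
    # Rare terms, SKUs, or typos can embed poorly; lexical matching covers those edge cases.
    return {"source": "keyword", "results": keyword_search(query, top_k)}
```

Returning the `source` field makes it easy to log how often the fallback fires. That is a useful signal when tuning the threshold.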

6. Integrate the Front-End

Connect the search interface to your query API and present ranked results clearly. A good integration is invisible to users yet transformative for their experience. That balance between engineering and empathy is what distinguishes good AI design.

Challenges and Best Practices

Although powerful, neural search comes with its own set of challenges.

Scalability:

Searching millions of embeddings requires efficient vector indexing and retrieval techniques, such as approximate nearest-neighbor indexes. Planning for horizontal scaling helps keep performance stable as data grows.
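One standard pattern for horizontal scaling is scatter-gather: shard the index, then merge the shards' results at query time. Below is a minimal Python sketch that assumes each shard is a hypothetical callable returning its local top-k as `(score, doc_id)` pairs. Managed vector databases typically handle this distribution for you.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Hit = tuple[float, str]  # (score, doc_id)
Shard = Callable[[list[float], int], list[Hit]]

def scatter_gather(query_vec: list[float], shards: list[Shard], top_k: int = 10) -> list[Hit]:
    """Query all shards in parallel, then merge their local top-k lists into a global top-k."""
    if not shards:
        return []
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partial = list(pool.map(lambda shard: shard(query_vec, top_k), shards))
    # A global top-k item is always within its own shard's local top-k, so merging suffices.
    return heapq.nlargest(top_k, (hit for hits in partial for hit in hits))
```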

Latency:

Similarity search in high-dimensional space must run with low latency for results to feel instantaneous. Optimized indexing and caching techniques help maintain a seamless user experience.

Data Freshness:

As content evolves, embeddings must be regenerated to reflect the updated text. Automated pipelines for periodic updates keep results accurate and relevant.
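Re-embedding everything on every run gets expensive as content grows. Below is a minimal Python sketch of an incremental refresh. It assumes you record a SHA-256 hash of each document's text at indexing time; the `plan_refresh` name and data layout are hypothetical. Only new or changed documents are re-embedded, and removed ones are deleted from the store.

```python
import hashlib

def content_hash(text: str) -> str:
    """Fingerprint of a document's text, recorded alongside its vector at indexing time."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def plan_refresh(
    current_docs: dict[str, str], indexed_hashes: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Return (ids to embed or re-embed, ids to delete) by comparing content hashes."""
    to_embed = [
        doc_id
        for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)  # new (no hash yet) or changed
    ]
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return sorted(to_embed), sorted(to_delete)
```

Switching to a different embedding model is the exception. Vectors from different models are not comparable, so the whole index must be rebuilt.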

Ethical & Privacy Considerations:

Search systems learn from data, so it is essential to ensure compliance, fairness, and transparency. Data anonymization and appropriate data management preserve user confidence while still enabling insights.

Best Practices:

- Combine neural and keyword search for hybrid relevance. This ensures edge cases or rare queries are still handled effectively.

- Continuously monitor retrieval quality. Regular audits help maintain consistent relevance and performance.

- Optimize hardware and caching for real-time performance. Efficient resource management avoids bottlenecks under high traffic.

- Be transparent about data usage. Clear communication about what data is used and how it is applied builds user trust and supports regulatory compliance.
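One widely used way to combine keyword and neural result lists is reciprocal rank fusion (RRF), offered here as an illustration rather than a prescribed method. It merges rankings by position, so BM25 scores and cosine similarities never need to be put on a common scale. Below is a minimal Python sketch; `k = 60` is a commonly used default constant.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge ranked id lists: each list adds 1 / (k + rank) for every id it contains."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Documents found by both retrievers accumulate two contributions and rise to the top.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```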

Human Oversight in AI-Driven Search Systems

Even the most advanced AI systems benefit from human oversight. Embeddings can capture meanings and relationships, but they may miss subtleties like tone, intent, or emerging trends. Adding review loops, curated validation, and quality monitoring helps ensure results stay in sync with both business goals and user expectations.

Feedback from users and reviewers can also guide model retraining over time, minimizing semantic drift and enhancing relevance. At scale, these monitoring practices turn a technically proficient search system into an intelligent one, where learning, governance, and adaptation coexist.
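Curated validation becomes measurable with a small set of queries whose relevant documents reviewers have labelled. Below is a minimal Python sketch of mean recall@k over such a set; the function names are hypothetical. Metrics such as MRR or nDCG are common complements that also reward putting relevant results higher.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the labelled relevant documents that appear in the top-k results."""
    if not relevant:
        raise ValueError("each judged query needs at least one relevant document")
    return len(set(retrieved[:k]) & relevant) / len(relevant)

def mean_recall_at_k(runs: dict[str, list[str]], judgments: dict[str, set[str]], k: int = 10) -> float:
    """Average recall@k across every judged query; queries with no run count as zero recall."""
    if not judgments:
        raise ValueError("no judged queries to evaluate")
    return sum(recall_at_k(runs.get(q, []), rel, k) for q, rel in judgments.items()) / len(judgments)
```

Tracking this number across model or index changes turns "monitor retrieval quality" into a regression check.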

The Future of Neural Search

The horizon of neural search extends far beyond text. The future is multimodal discovery, where text, images, audio, and video coexist in a single understanding system.

Imagine asking a platform, “Show me interior designs that match this photograph,” or “Find tutorials related to this code snippet.” These are not distant scenarios—they are already taking shape.

Voice-driven and generative interfaces are next. Users will converse with web applications naturally, combining search, chat, and generation into a unified experience.

As AI web applications evolve, neural search will become their interpretive core—the element that understands not just what users say, but what they mean to find.

Forward-thinking companies are already embedding this intelligence layer today, ensuring their platforms stay relevant in tomorrow’s discovery-driven web.

AI Web Applications as Intelligent Ecosystems

Neural search is not an isolated feature; it’s the connective tissue of modern AI web applications. As platforms evolve, search will integrate with recommendation engines, personalization layers, and multimodal intelligence systems. Text, images, and audio will coexist within unified embeddings, allowing applications to anticipate needs, adapt interfaces, and deliver insights in real time.

In this way, AI web applications transform into living ecosystems, learning from every interaction and shaping themselves around human intent. For businesses, this represents a shift from data accumulation to intelligent engagement, where every query, click, or interaction becomes an opportunity to refine understanding and deliver meaningful experiences.

Conclusion

Search used to be about retrieval. Now, it’s about understanding. Neural search redefines how people interact with digital information, turning every query into a conversation and every result into insight.

For product leaders and founders, integrating neural search isn’t a feature—it’s a strategy. It’s how you build platforms that feel intuitive, adaptive, and human. In an AI-based web application, neural search becomes the invisible architecture of intelligence: it learns, adapts, and aligns experiences with human intent. And that’s where the web is heading—not toward more data, but toward more meaning.

About iProgrammer

With deep expertise across AI web applications, mobile solutions, and enterprise systems, we at iProgrammer Solutions help businesses design experiences that combine usability with intelligence.

Our Web Application Development Services integrate modern frameworks, data engineering, and machine learning to deliver platforms that are fast, scalable, and future-ready. From neural search integration to AI-powered analytics, iProgrammer brings the technical maturity and design sensitivity needed to turn complex technology into meaningful user experiences. If your vision involves smarter, context-driven web applications, our team can help you build it, intelligently.